In contrast to the science fiction portrayal of evil computers plotting to overthrow humankind, artificial intelligence (AI) in fact seems poised to help improve human health in a multitude of ways, including flagging suspicious moles for dermatologist follow-up, monitoring blood volume in military field personnel and tracking flu outbreaks via Twitter.

The Colorado Clinical and Translational Science Institute (CCTSI) recently held the 7th annual CU-CSU Summit on the topic of “AI and Machine Learning in Biomedical Research,” attended by more than 150 researchers, clinicians and students from all three CU campuses and CSU.

Ronald Sokol, MD, CCTSI director, said, “The purpose of the CCTSI is to accelerate and catalyze translating discoveries into better patient care and population health by bringing together expertise from all our partners.” Rather than leaving each campus to operate in a silo, the annual Summit brings together clinicians, basic and clinical researchers, postdoctoral fellows, mathematicians and others to highlight ongoing research excellence, establish collaborations and increase interconnectivity among the four campuses.

Registration for this year’s AI-themed conference reached capacity, and the event drew more mathematicians and more poster submissions than any of the six preceding events. “The topic of AI in research is everywhere. No one knows exactly what is going to happen,” Sokol said, referring to the many privacy and ethical concerns surrounding the use of AI in research. “I’m here to learn too – I’m not sure I understand it all.”

Living up to the ‘hype’

Lawrence Hunter, director of the Computational Bioscience program at CU Anschutz, framed AI as having the potential to change the way people practice medicine. “There’s a lot of hype, so we need to be careful how we talk about it,” Hunter said.

What, specifically, is AI doing for biomedical research in Colorado? Michael Paul, PhD, assistant professor of Information Science at CU Boulder, uses social monitoring of sites like Twitter and Google to track and predict public health trends, including yearly flu rates and Zika virus outbreaks. The Centers for Disease Control and Prevention (CDC) is typically considered the gold standard for public health information, but Paul points out that CDC data is always at least two weeks behind. In contrast, Google Trends provides a daily snapshot of the population. As a fictional example: “1 million people in Colorado searched for ‘flu symptoms’ on September 15, 2019.”
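Using a timely signal like search volume to estimate a figure that official reports will only confirm later is often called "nowcasting": relate past search counts to the official numbers that eventually arrived, then apply that relationship to this week's searches. The sketch below assumes a simple straight-line relationship and uses invented numbers and hypothetical function names (`fit_line`, `nowcast`); it illustrates the general idea and is not Paul's actual model.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def nowcast(slope, intercept, current_searches):
    """Estimate this week's flu rate before official figures are published."""
    return slope * current_searches + intercept

# Invented numbers: weekly search counts for "flu symptoms" and the flu rate
# that official surveillance later reported for those same weeks.
past_searches = [100, 200, 300, 400]
past_official_rate = [1.0, 2.0, 3.0, 4.0]

slope, intercept = fit_line(past_searches, past_official_rate)
estimate = nowcast(slope, intercept, 500)  # about 5.0 for this toy data
```

Real systems must also cope with the fact that search behavior shifts for reasons unrelated to illness (news coverage, for instance), which a single straight line cannot capture.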

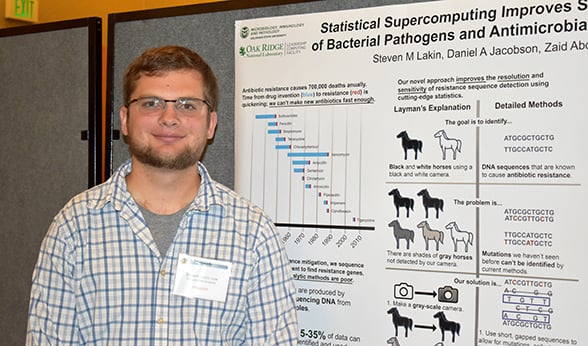

Steven Lakin, a CSU veterinary medicine student, displays his supercomputing research project at the CU-CSU Summit.

Social media sources like Twitter can be mined for tweets containing terms like ‘flu’ within a specific geographic area or demographic group of interest. Using Twitter, researchers can distinguish between “I have the flu” and “I hope I don’t get the flu,” whereas Google search data cannot make this distinction.
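As a toy illustration of telling a report of illness apart from a worry about it, the sketch below uses hand-written phrase patterns. This is a deliberate simplification: research systems typically train statistical classifiers on tweets that people have labeled by hand. The function name `label_tweet` and the phrase lists are hypothetical.

```python
import re

# Hypothetical phrase patterns, for illustration only.
INFECTION_PATTERNS = [
    r"\bi (have|got|caught) (the )?flu\b",
    r"\bsick with (the )?flu\b",
]
CONCERN_PATTERNS = [
    r"\bhope i (don't|do not|won't) (get|catch) (the )?flu\b",
    r"\bflu shot\b",
]

def label_tweet(text: str) -> str:
    """Label a tweet as 'infection', 'concern', 'other_flu_mention' or 'unrelated'."""
    t = text.lower().replace("\u2019", "'")  # normalize curly apostrophes
    if "flu" not in t:
        return "unrelated"
    # Check concern phrases first so "I hope I don't get the flu"
    # is never counted as a report of infection.
    if any(re.search(p, t) for p in CONCERN_PATTERNS):
        return "concern"
    if any(re.search(p, t) for p in INFECTION_PATTERNS):
        return "infection"
    return "other_flu_mention"

for tweet in ["I have the flu", "I hope I don\u2019t get the flu"]:
    print(tweet, "->", label_tweet(tweet))
```

Counting only the "infection" tweets in a region gives a rough signal of actual illness, which is the distinction keyword counts of searches cannot make.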

Steve Moulton, MD, a trauma surgeon, director of Trauma and Burn Services at Children's Hospital Colorado and CU School of Medicine, and co-founder of Flashback Technologies, Inc., used a machine learning system originally designed to help robots navigate unfamiliar terrain in unstructured outdoor environments. From it he created the CipherOx, a new patented handheld medical device that received FDA clearance in 2018.

The CipherOx, developed in partnership with the Defense Advanced Research Projects Agency (DARPA) of the U.S. Department of Defense, monitors heart rate and oxygen saturation and estimates blood volume through a new AI-calculated number called the compensatory reserve index (CRI), which indicates how close a patient is to going into shock from blood loss or dehydration. Although it was designed for military field operations, the CipherOx can also be used to monitor patients en route to the hospital and postpartum women. Notably, Moulton’s pilot studies were funded by the CCTSI.

AI basics

Machine learning, a branch of AI, can be broken into three broad categories: supervised, unsupervised and reinforcement learning. In supervised learning, AI systems learn to make decisions by training on examples that have already been labeled with the correct answer. For example, in 2016 Google developed an AI-based tool to help ophthalmologists identify patients at risk for diabetic retinopathy, a complication of diabetes that can result in blindness. The Google algorithm learned from a set of retinal images graded by board-certified ophthalmologists and built its own criteria for making yes-or-no decisions.
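To make "learning from labeled examples" concrete, here is a minimal supervised classifier. It uses a nearest-centroid rule (average the examples for each label, then assign a new case to the closest average), which is far simpler than the deep neural network Google used. The two numeric features, the data and the function names are invented for illustration.

```python
from statistics import mean

def train_centroids(examples):
    """Learn one average feature vector (centroid) per label from labeled data."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(col) for col in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: dist(centroids[label]))

# Hypothetical training data: each image has already been reduced to two
# numbers and labeled "yes" or "no" by an expert grader.
training = [
    ((0.9, 0.8), "yes"),
    ((0.8, 0.7), "yes"),
    ((0.1, 0.2), "no"),
    ((0.2, 0.1), "no"),
]
model = train_centroids(training)
print(predict(model, (0.85, 0.75)))  # → yes
print(predict(model, (0.15, 0.1)))   # → no
```

The key supervised ingredient is the human-supplied label on every training example; the algorithm only decides how to generalize from them.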

In unsupervised learning, AI looks for patterns in data that have not been labeled, often relying on probabilities to make sense of complex datasets; predictive text on your cellphone is an example of this. In biomedical research, one example of unsupervised learning is using AI to analyze drug labels and find common safety concerns among drugs that treat similar conditions.
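The drug-label example can be sketched as grouping drugs by how much their listed safety terms overlap, with no categories supplied in advance. This sketch assumes each label has already been reduced to a set of safety terms and groups drugs using Jaccard similarity with single linkage; the drug names, terms and the 0.5 threshold are hypothetical, and real label-mining studies use much richer text processing.

```python
def jaccard(a: set, b: set) -> float:
    """Overlap between two term sets: size of intersection / size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def cluster_labels(labels: dict, threshold: float = 0.5) -> list:
    """Group drugs whose safety-term sets overlap at or above `threshold`.

    Single linkage: a drug joins a group if it is similar enough to any member.
    """
    names = list(labels)
    parent = {n: n for n in names}  # union-find forest

    def find(n):
        while parent[n] != n:
            parent[n] = parent[parent[n]]  # path halving
            n = parent[n]
        return n

    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if jaccard(labels[a], labels[b]) >= threshold:
                parent[find(a)] = find(b)

    groups = {}
    for n in names:
        groups.setdefault(find(n), []).append(n)
    return sorted(sorted(g) for g in groups.values())

def shared_terms(labels: dict, group: list) -> list:
    """Safety terms that appear on every label in the group."""
    return sorted(set.intersection(*(labels[n] for n in group)))

# Hypothetical drug labels reduced to sets of safety terms.
drug_labels = {
    "Drug A": {"nausea", "dizziness", "liver injury"},
    "Drug B": {"nausea", "liver injury", "rash"},
    "Drug C": {"drowsiness", "dry mouth"},
    "Drug D": {"drowsiness", "dry mouth", "constipation"},
}
for group in cluster_labels(drug_labels):
    print(group, shared_terms(drug_labels, group))
```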

Finally, reinforcement learning, used by programs such as Google’s AlphaZero, one of the world’s strongest chess-playing programs, lets AI try many options while learning to maximize rewards and minimize penalties. Because this trial and error can take place in a simulated environment, a reinforcement learning program can fully explore a hypothetical space without causing real-world harm. In biomedical research, reinforcement learning can be useful when AI is given a narrow range of choices, for example, predicting which dose within a narrow range of possible drug doses will produce the best patient response.
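Choosing among a few doses by trial and error can be sketched as a multi-armed bandit, a simplified reinforcement learning setting, solved here with an epsilon-greedy strategy. Everything is simulated: the dose levels, their "true" net benefits and the noise are invented, and AlphaZero itself relies on far more sophisticated methods (deep neural networks combined with tree search).

```python
import random

# Hypothetical simulator: the average net benefit of each dose (mg), with a
# built-in penalty at the highest dose standing in for side effects.
TRUE_NET_BENEFIT = {10: 0.2, 20: 0.5, 30: 0.8, 40: 0.4}

def simulate_response(dose, rng):
    """Noisy reward (benefit minus penalty) for one simulated patient."""
    return TRUE_NET_BENEFIT[dose] + rng.gauss(0, 0.1)

def epsilon_greedy(doses, trials=2000, epsilon=0.1, seed=0):
    """Learn which dose earns the highest average reward by trial and error."""
    rng = random.Random(seed)
    counts = {d: 0 for d in doses}
    estimates = {d: 0.0 for d in doses}
    for t in range(trials):
        if t < len(doses):
            dose = doses[t]                       # try every dose once first
        elif rng.random() < epsilon:
            dose = rng.choice(doses)              # explore: random dose
        else:
            dose = max(doses, key=estimates.get)  # exploit: best estimate so far
        reward = simulate_response(dose, rng)
        counts[dose] += 1
        # Running average of the rewards observed for this dose.
        estimates[dose] += (reward - estimates[dose]) / counts[dose]
    return max(doses, key=estimates.get), estimates

best_dose, estimates = epsilon_greedy([10, 20, 30, 40])
```

The `epsilon` parameter controls the trade-off the article describes: most of the time the program uses what it has learned, but it keeps occasionally trying other options in case its estimates are wrong.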

Human mistakes vs. AI mistakes

According to Lawrence Hunter from CU Anschutz, a major problem with AI in healthcare is not proving how good AI is, but paying attention to where it fails. “With 92% correct AI, that gives us confidence that the system is accurate, but we have to be really careful about the other 8% because the kinds of errors AI makes are different (and can be more severe) than the kinds of errors humans make,” he said.

Matt DeCamp, associate professor with the Center for Bioethics and Humanities at CU Anschutz, gave an example of this phenomenon: when AI was used to classify pictures, a picture of a dragonfly was variously identified as a skunk, sea lion, banana and mitten. “Some mistakes are easily detected (dragonfly doesn’t equal sea lion),” DeCamp said, “but other mistakes closer to the realm of reasonable may challenge how risks are evaluated by Institutional Review Boards (IRB),” the committees responsible for reviewing risks to participants in research such as clinical trials.

The AI landscape

DeCamp also framed the broader AI landscape.

In examples like Google’s system for helping ophthalmologists catch patients at risk of blindness, AI has been heralded as increasing patient access, particularly in rural areas and for patients with limited mobility, and decreasing costs for providers and hospitals. While the potential for using AI to improve human health is high, DeCamp echoed Hunter’s comments and cautioned against automatically accepting that AI is superior. “It’s possible that an AI system could be better on average, but remember that being better on average can obscure systematic biases for different subpopulations. And that is an issue of justice.”
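DeCamp's point about averages comes down to simple arithmetic. In this invented example (the numbers are hypothetical, chosen to echo the 92% figure Hunter used), a model is right 92% of the time overall yet only 40% of the time for a small subgroup; the function name `accuracy_by_group` is also hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return overall accuracy and accuracy within each subgroup.

    Each record is a (group, prediction_was_correct) pair.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    overall = sum(correct.values()) / sum(totals.values())
    per_group = {g: correct[g] / totals[g] for g in totals}
    return overall, per_group

# Invented data: 90 patients in group "A", 10 in a smaller group "B".
records = ([("A", True)] * 88 + [("A", False)] * 2
           + [("B", True)] * 4 + [("B", False)] * 6)
overall, per_group = accuracy_by_group(records)
# overall is 92/100 = 0.92, but per_group["B"] is only 4/10 = 0.4
```

Because group B is small, its poor results barely move the overall figure, which is exactly how a good average can hide a subgroup the system serves badly.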

Challenges and concerns

Some of the issues surrounding AI in biomedical research involve patient privacy. For example, a lawsuit made headlines this summer when a patient at the University of Chicago claimed that data sharing between the university and Google violated his privacy, breached his contract and violated consumer protection law. Michael Paul from CU Boulder said that recent studies of recreational drug use raise obvious concerns about how to balance public health research with privacy, since tweets are posted in a public space.

Obtaining truly informed consent is another ethical concern, given the ‘black box’ nature of AI algorithms. DeCamp clarified, “Black box, meaning that the algorithmic workings are not only unknown, but may be in principle unknowable.”

Just because we can, should we?

As an ethicist, Matt DeCamp said, he sees AI raising big questions. “What is an appropriate use of AI in the first place? Just because we can, does that mean we should? For example, there’s interest in developing robot caregivers. Should we? Would computer-generated poetry be ‘real’ poetry?” Patients may fear further depersonalization of health care in a system that can already seem impersonal at times.

The long-term effects of AI are even more uncertain. Will AI change the way we think or act toward each other? DeCamp highlighted research by sociologist Sherry Turkle, PhD, of the Massachusetts Institute of Technology that supports this possibility. Summarizing Turkle’s research, DeCamp said, “Computers don’t just change what we do, but also what we think.”

Guest contributor: Shawna Matthews, a CU Anschutz postdoc