Casey Greene, PhD, chair of the University of Colorado School of Medicine’s Department of Biomedical Informatics, is working toward a future of “serendipity” in healthcare – using artificial intelligence (AI) to help doctors receive the right information at the right time to make the best decision for a patient.

Finding that serendipity begins with the data. Greene said the Department’s faculty works with data ranging from genomic-sequencing information and cell imaging to electronic health records. Each area has its own robust constraints – ethical and privacy protections – to ensure that the data are being used in accordance with people’s wishes.

His team uses petabytes of sequencing data that are available to anyone, Greene said. “I think it’s empowering,” he said, noting that anyone with an internet connection can conduct scientific research.

Following the selection or creation of a data set, Greene and other AI researchers at the CU Anschutz Medical Campus turn to the core of AI work – building algorithms and programs that can detect patterns. The goal is to find links in these large data sets that ultimately lead to better treatments for patients. Still, human insight brings essential perspectives to the research, Greene said.

“The algorithms do learn patterns, but they can be very different patterns – and can become confused in interesting ways,” he said. Greene used a hypothetical example of sheep and hillsides, two things often seen together. Researchers must teach the program to separate the two items, he said.

“A person can look at a hillside and see sheep and recognize sheep. They can also see a sheep somewhere unexpected and realize that the sheep is out of place. But these algorithms don't necessarily distinguish between sheep and hillsides at first because people usually take pictures of sheep on hillsides. They don't often take pictures of sheep at the grocery store, so these algorithms can start to predict that all hillsides have sheep,” Greene said.

“It's a little bit esoteric when you're thinking about hillsides and sheep,” he said. “But it matters a lot more if you're having algorithms that look at medical images where you'd like to predict in the same way that a human would predict – based on the content of the image and not based on the surroundings.” Encoding prior human knowledge (“knowledge engineering”) into these systems can lead to better healthcare down the line, Greene said.
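
For readers who want to see the sheep-and-hillside problem in miniature, the toy sketch below shows how a very simple learner can latch onto the background instead of the animal. It is an illustration only, not Greene's method: the data, the feature names (`hillside`, `woolly_shape`) and the helper functions are all invented for this example, and real image models are far more complex.

```python
# Toy illustration of a model learning the background instead of the object.
# All data and names here are hypothetical, made up for this sketch.

def train_stump(examples):
    """Pick the single feature whose value best matches the label in training."""
    feature_names = list(examples[0][0].keys())

    def accuracy(name):
        hits = sum(features[name] == label for features, label in examples)
        return hits / len(examples)

    return max(feature_names, key=accuracy)


def predict(chosen_feature, features):
    """Predict 'sheep' (1) exactly when the chosen feature is present."""
    return features[chosen_feature]


# Training photos: every sheep is on a hillside, and some sheep are partly
# hidden, so the woolly shape is a slightly less reliable clue than the hill.
train = (
    [({"hillside": 1, "woolly_shape": 1}, 1)] * 8     # sheep, clearly visible
    + [({"hillside": 1, "woolly_shape": 0}, 1)] * 2   # sheep, partly hidden
    + [({"hillside": 0, "woolly_shape": 0}, 0)] * 10  # no sheep, no hillside
)

chosen = train_stump(train)

# Two photos unlike anything in the training set.
sheep_in_grocery_store = {"hillside": 0, "woolly_shape": 1}
empty_hillside = {"hillside": 1, "woolly_shape": 0}

print("Feature the model relies on:", chosen)
print("Sheep in grocery store ->", predict(chosen, sheep_in_grocery_store))
print("Empty hillside ->", predict(chosen, empty_hillside))
```

Because the hillside matches the label perfectly in this made-up training set, the learner relies on it, then misses the sheep in the grocery store and "sees" a sheep on the empty hillside. Adding training photos that break the link between sheep and hillsides, or building in the knowledge that the animal rather than the scenery is what matters, would be two ways to push such a learner toward the right clue.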

And when it comes to AI in healthcare, Greene said it is key to have open models and diverse teams doing the work. “It gives others a chance to probe these models with their own questions. And I think that leads to more trust.”

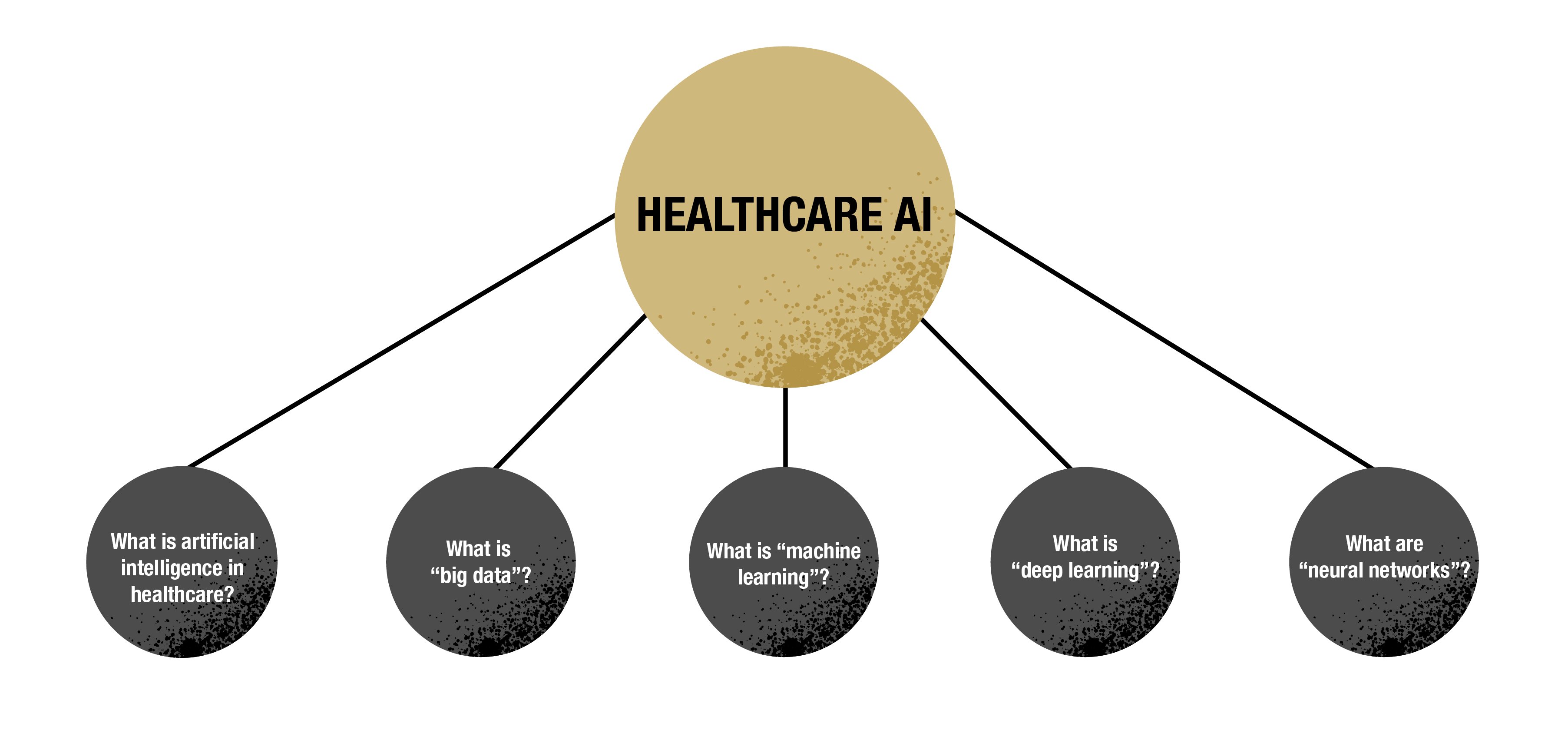

In the Q&A below, Greene provides a general overview of the terms and technology behind AI alongside the challenges he and his fellow researchers face.