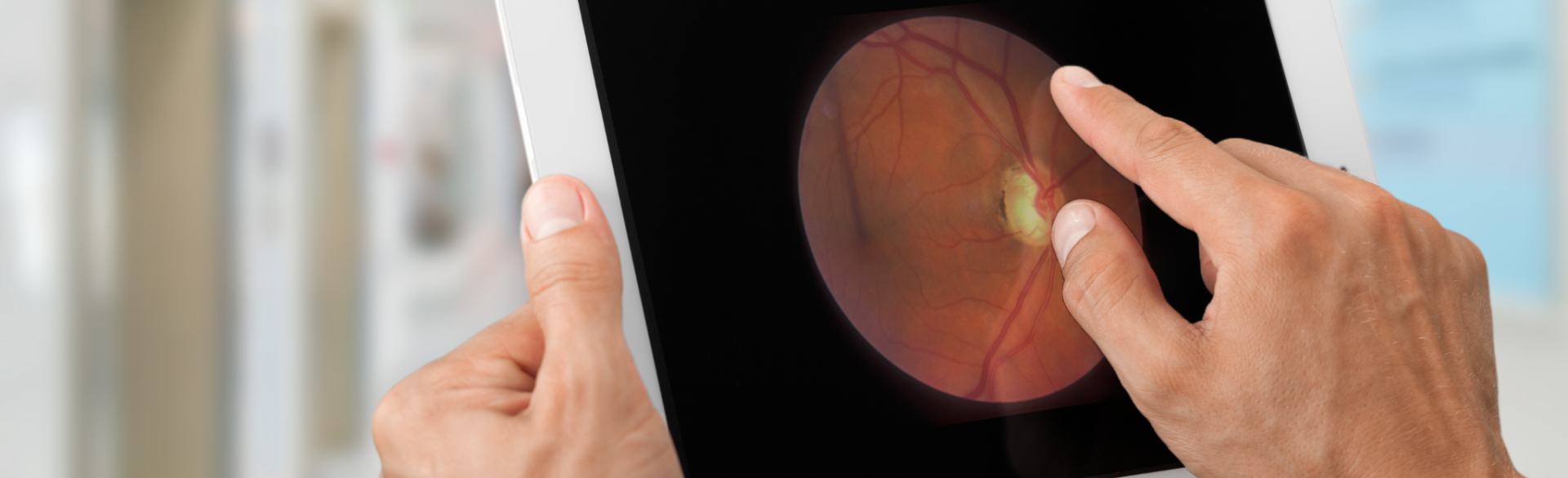

Large language models (LLMs), such as ChatGPT, have skyrocketed in popularity in the last year due to their ability to utilize vast amounts of information, but could they be used to diagnose ocular disease?

Ophthalmology researchers have begun digging into the question. Earlier this year, Malik Kahook, MD, professor in the Department of Ophthalmology and the Slater Family Endowed Chair in Ophthalmology at the University of Colorado School of Medicine, joined researchers at the University of Tennessee Memphis to test the accuracy of ChatGPT compared to senior ophthalmology residents.

In the study, published in September in the journal Ophthalmology and Therapy, researchers took detailed text case reports of 11 clinical scenarios involving primary and secondary glaucoma from a public database, entered them into ChatGPT, and asked for a diagnosis. The same case information was presented to three senior ophthalmology residents for comparison.

The results?

ChatGPT gave a correct diagnosis in eight of the 11 cases. The three ophthalmology residents were correct in six, eight, and eight cases, respectively. In cases with a common glaucoma presentation, both the residents and ChatGPT were able to give an accurate diagnosis, but both were less accurate in cases considered atypical or complex.

The team of researchers concluded that ChatGPT’s accuracy was comparable to that of the residents, and “with further development, ChatGPT may have the potential to be used in clinical care settings, such as primary care offices, for triaging and in eye care clinical practices to provide objective and quick diagnoses of patients with glaucoma.”

The researchers also say the technology still has deficiencies, such as its need for detailed, structured information that might not always be available in real-world settings.

Here’s how Kahook explains the future of LLMs in ophthalmology and glaucoma diagnosis as the technology continues to evolve.
